In the realm of industrial automation, robots are taking center stage, but mastering complex tasks like object grasping and assembly has been a hurdle.

However, new research from Qingdao University of Technology could help change that.

Unlocking the Potential of Autonomous Robotics

Semi-autonomous and autonomous robots are making waves across various industries, from manufacturing to logistics.

Their ability to assist human workers and streamline processes is undeniable.

But what if they could do more than just assist? What if they could learn and adapt on their own?

The Challenge of Industrial Automation

Industrial robots have long been hailed as the future of manufacturing, promising efficiency and precision.

Yet they typically have to be explicitly programmed for each specific task, and this reliance on extensive programming has been a bottleneck.

This painstaking process not only consumes time but also limits flexibility.

A Game-Changing Solution

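Enter deep reinforcement learning, an approach in which robots learn through trial and error, much like humans do: they try actions, receive a reward signal indicating how well each action worked, and gradually favor the actions that earn higher rewards.

Researchers at Qingdao University of Technology have applied this technique to teach industrial robots grasping and assembly skills.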
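To make the trial-and-error idea concrete, here is a minimal sketch of tabular Q-learning, the classical algorithm that deep Q-learning builds on. The toy task (a gripper sliding along a five-position rail toward an object), the reward values, and the hyperparameters are all hypothetical and chosen for illustration only; they are not taken from the study.

```python
import random

N_POSITIONS = 5   # hypothetical: discretized gripper positions along a rail
OBJECT_POS = 3    # hypothetical: position of the object to grasp
ACTIONS = ["left", "right", "grasp"]

def step(pos, action):
    """Toy environment: returns (next_pos, reward, done)."""
    if action == "left":
        return max(pos - 1, 0), -0.1, False          # small cost per move
    if action == "right":
        return min(pos + 1, N_POSITIONS - 1), -0.1, False
    # "grasp" ends the episode: success only when aligned with the object
    return pos, (1.0 if pos == OBJECT_POS else -1.0), True

def train(episodes=2000, alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_POSITIONS) for a in ACTIONS}
    for _ in range(episodes):
        pos = rng.randrange(N_POSITIONS)              # random starting position
        for _ in range(20):                           # cap episode length
            # Epsilon-greedy: sometimes explore at random, otherwise exploit
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(pos, a)])
            nxt, reward, done = step(pos, action)
            # Q-learning update toward reward + discounted best next value
            best_next = 0.0 if done else max(q[(nxt, a)] for a in ACTIONS)
            target = reward + gamma * best_next
            q[(pos, action)] += alpha * (target - q[(pos, action)])
            pos = nxt
            if done:
                break
    return q

def greedy_policy(q):
    """Best-known action in each position after training."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_POSITIONS)}
```

After training, `greedy_policy(train())` should move the gripper toward position 3 and grasp there. Nobody programmed that behavior explicitly; it emerges from the rewards alone. Deep Q-learning replaces the lookup table `q` with a neural network so the same idea scales to camera images and continuous sensor data.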

The Breakthrough Research

Published in The International Journal of Advanced Manufacturing Technology, the study by Chengjun Chen, Hao Zhang, and their colleagues introduces new deep reinforcement learning algorithms tailored to industrial robots.

These algorithms speed up training for grasping and assembly tasks, which could allow robots to be adapted to new tasks and deployed more quickly.

Deep Reinforcement Learning for Rapid Skill Acquisition

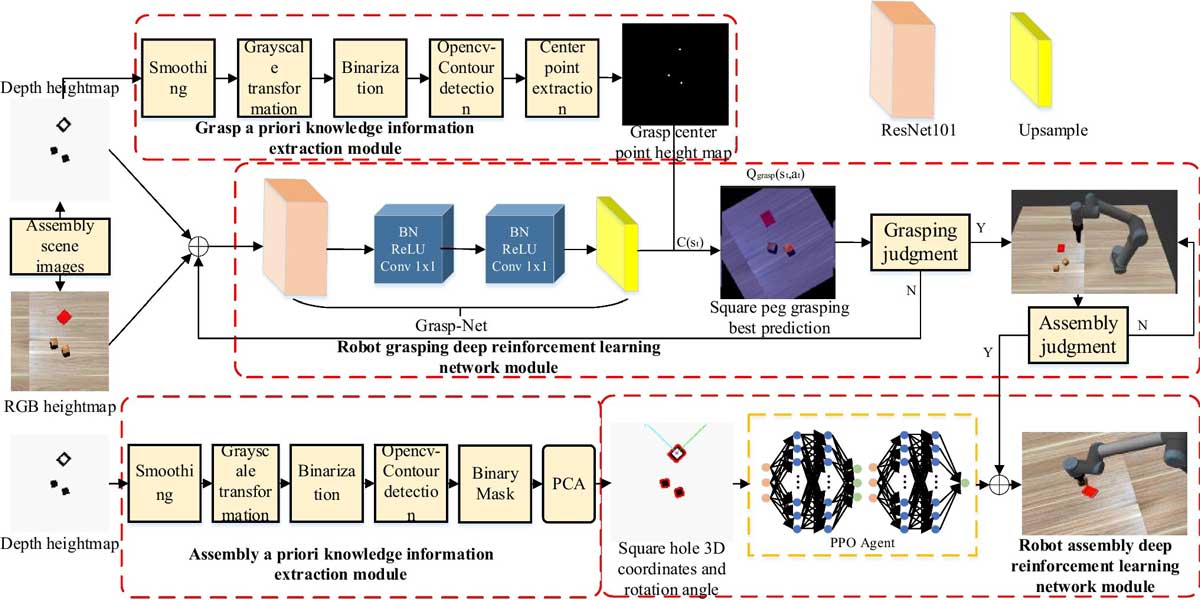

The researchers devised an algorithm based on deep Q-learning for grasping and one based on proximal policy optimization (PPO) for assembly.

The aim is not only faster training but also greater efficiency in how much experience the robot needs.

By incorporating a priori knowledge about the tasks, the algorithms optimize the robot's actions while reducing both training time and the amount of interaction data required.
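Deep Q-learning and PPO are both standard reinforcement learning methods. As a rough illustration (not the authors' code), the sketch below shows the core quantity each one optimizes: the bootstrapped target that a deep Q-network is trained to predict, and PPO's clipped surrogate objective. In a real system the Q-values and log-probabilities would come from neural networks; here they are plain numbers, and the default values of `gamma` and `clip_eps` are common choices assumed for the example.

```python
import math
from typing import Sequence

def dqn_target(reward: float, next_q_values: Sequence[float],
               done: bool, gamma: float = 0.99) -> float:
    """Target y = r + gamma * max_a' Q(s', a') that a deep Q-network regresses toward."""
    if done:
        return reward  # no future value after a terminal step
    return reward + gamma * max(next_q_values)

def ppo_clipped_objective(new_logp: Sequence[float], old_logp: Sequence[float],
                          advantages: Sequence[float], clip_eps: float = 0.2) -> float:
    """Mean PPO clipped surrogate objective over a batch (to be maximized)."""
    total = 0.0
    for lp_new, lp_old, adv in zip(new_logp, old_logp, advantages):
        ratio = math.exp(lp_new - lp_old)                       # pi_new(a|s) / pi_old(a|s)
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Take the more pessimistic of the unclipped and clipped terms
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)
```

For example, if an action became 1.5 times more likely under the updated policy and had an advantage of 1.0, the objective credits only 1.2 for it. This clipping is what keeps each PPO update from moving the policy too far from the one that collected the data.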

Assessing Robot Proficiency

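To guide training and gauge the robots' progress, the team designed reward functions tailored to the constraints of grasping and assembly.

A reward function scores each action the robot takes. The learning algorithm adjusts the robot's behavior to maximize that score, so the accumulated reward also indicates how well the robot is mastering each skill.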
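The article does not spell out the reward functions themselves. The sketch below is a hypothetical example of how a shaped reward for peg-in-hole assembly might encode task constraints: a bonus for success, a penalty for exceeding a force limit, and a small dense term that rewards moving closer to the hole. Every field name, weight, and threshold here is an assumption for illustration, not the study's design.

```python
from dataclasses import dataclass

@dataclass
class AssemblyState:
    dist_to_hole_mm: float   # hypothetical: distance from peg tip to hole
    contact_force_n: float   # hypothetical: measured contact force in newtons
    inserted: bool           # hypothetical: whether the peg is fully seated

MAX_FORCE_N = 20.0  # hypothetical safety limit on contact force

def assembly_reward(state: AssemblyState) -> float:
    """Hypothetical shaped reward for one step of a peg-in-hole task."""
    if state.inserted:
        return 10.0                          # large bonus for completing the task
    if state.contact_force_n > MAX_FORCE_N:
        return -10.0                         # penalize violating the force constraint
    return -0.1 * state.dist_to_hole_mm      # dense shaping: closer is better
```

The dense distance term gives the learner useful feedback on every step, rather than only when the peg finally slides in, which is one common way to reduce the amount of trial and error needed.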

Real-World Validation

The researchers tested their approach in both simulated and physical environments, with encouraging results.

The robots achieved an average grasping success rate of 90%, while assembly succeeded in up to 86.7% of attempts in simulation and 73.3% in the physical setup.

Future Directions and Enhancements

While the initial findings are promising, the researchers are not resting on their laurels.

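They plan further improvements, including more accurate detection of the hole during assembly and domain randomization (varying simulation parameters during training so that learned skills transfer more reliably to real hardware), to narrow the gap between simulation and reality.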
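Domain randomization is a general technique, and the sketch below shows only its basic shape: before each simulated training episode, physical and visual parameters are drawn at random from ranges, so the learned policy cannot overfit to one exact simulator configuration. The parameter names and ranges are hypothetical, including a random hole offset that loosely mimics imperfect hole detection; none of them come from the study.

```python
import random
from dataclasses import dataclass

@dataclass
class SimParams:
    friction: float         # hypothetical contact friction coefficient
    peg_mass_kg: float      # hypothetical mass of the part being assembled
    hole_offset_mm: float   # hypothetical hole-position error, mimicking detection noise
    light_intensity: float  # hypothetical rendering brightness for camera input

# Hypothetical ranges; a real setup would tune these to the target hardware.
RANGES = {
    "friction": (0.5, 1.2),
    "peg_mass_kg": (0.05, 0.15),
    "hole_offset_mm": (-2.0, 2.0),
    "light_intensity": (0.6, 1.4),
}

def sample_sim_params(rng: random.Random) -> SimParams:
    """Draw one randomized simulator configuration, uniformly within each range."""
    return SimParams(**{name: rng.uniform(lo, hi) for name, (lo, hi) in RANGES.items()})

# Typical use: resample at the start of every training episode, e.g.
#   params = sample_sim_params(rng)
#   (then configure a hypothetical simulator with `params` before the episode)
```

Because the policy sees many slightly different worlds during training, the real robot's conditions are more likely to fall inside the range it has already learned to handle.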

Conclusion

With deep reinforcement learning at its core, the future of industrial robotics looks brighter than ever.

Thanks to the pioneering work of researchers like Chen and Zhang, we’re on the brink of a new era where robots not only assist but also learn and evolve alongside their human counterparts.

FAQs

How does deep reinforcement learning differ from traditional robot programming?

Unlike traditional programming, where robots are explicitly coded for specific tasks, deep reinforcement learning allows robots to learn and adapt through experience, similar to how humans learn.

Could these techniques be used outside manufacturing?

Potentially. Similar techniques could apply in fields such as healthcare, agriculture, and even space exploration, where autonomous robots must perform complex tasks in dynamic environments.

What challenges remain?

While the success rates are encouraging, challenges remain, such as making the algorithms more robust in unstructured environments and reducing errors in real-world applications, where assembly succeeded less often than in simulation.

More information: Chengjun Chen et al., Robot autonomous grasping and assembly skill learning based on deep reinforcement learning, The International Journal of Advanced Manufacturing Technology (2024). DOI: 10.1007/s00170-024-13004-0